Part 2: Execute a simple ML model

The previous part introduced the main concepts of the platform and the basics of the SDK. With what you already know, you can execute very simple pipelines. To build more realistic applications, whose code relies on dependencies such as Python libraries, you need to learn more advanced functionalities, in particular how to configure the execution context of a pipeline and how to retrieve data stored on the platform.

This page revisits the commands from the previous part and explores more of the functionalities offered by the platform, using a real Machine Learning use case. We will improve this Machine Learning application later in Part 3 and Part 4.

You can find all the code used in this part and its structure here.

Prerequisites

- Python 3.9 or higher must be installed on your computer.

- You have completed Part 1: Execute a simple pipeline.

Overview of the use case

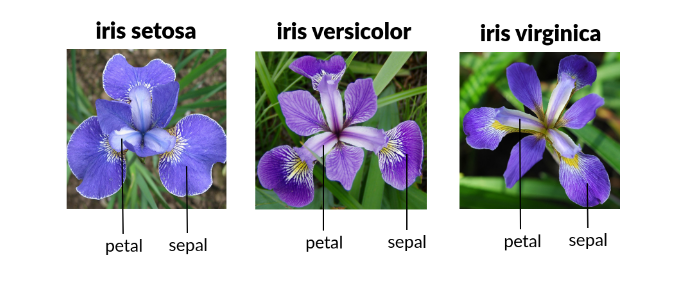

We will build a pipeline to train and store a simple ML model with the iris dataset. The iris dataset describes four features (petal length, petal width, sepal length, sepal width) for three different types of irises (Setosa, Versicolour, Virginica).

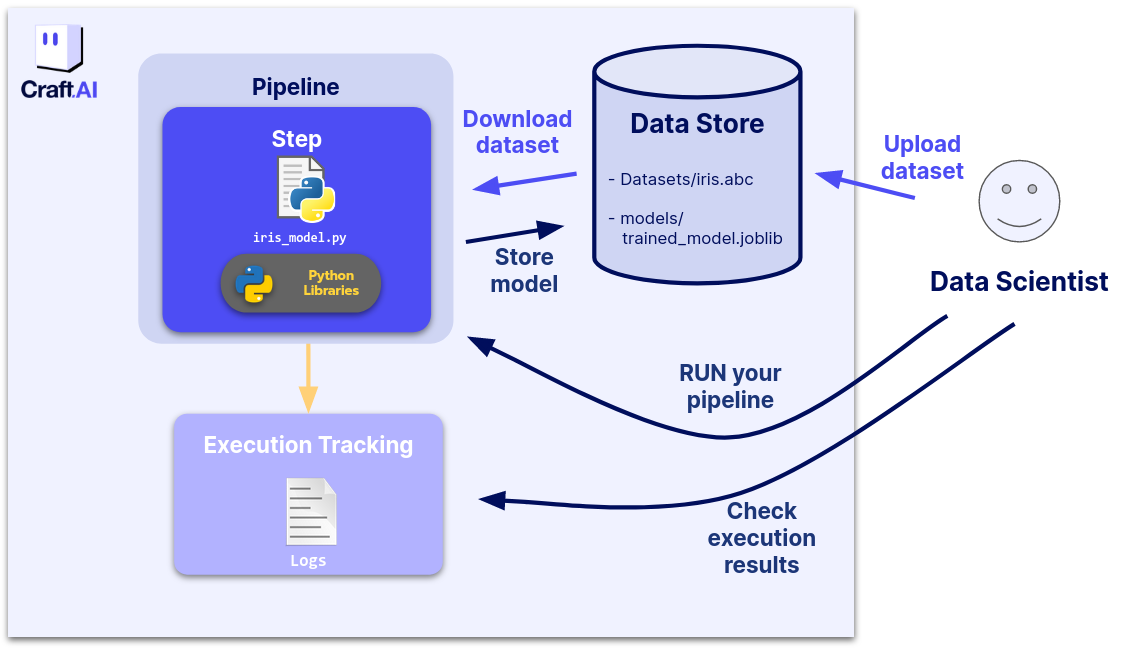

The goal of our application is to classify the flower type based on these four features. In this part, we start the new use case by retrieving the iris dataset from the Data Store (introduced just below), then building a pipeline that trains a simple ML model on the dataset and stores it on the Data Store.

Storing data on the platform

The Data Store is a file storage space to which you can upload, and from which you can download, an unlimited number of files, organized as you want using the SDK. All your pipelines can download files from the Data Store and upload files to it.

Pushing the iris dataset to the Data Store:

In our case, the first thing we want to do is upload the iris dataset to the Data Store.

You can do so with the upload_data_store_object function from the SDK by running this code in the terminal:

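from io import BytesIO

import pandas as pd
from sklearn import datasets

from craft_ai_sdk import CraftAiSdk

# Assumes the SDK is configured as in Part 1; skip this line if `sdk` already exists.
sdk = CraftAiSdk()

# Load iris as a DataFrame and append the target column to the four features.
iris = datasets.load_iris(as_frame=True)
iris_df = pd.concat([iris.data, iris.target], axis=1)

# Serialize to Parquet in memory (to_parquet() returns bytes when no path is given).
file_buffer = BytesIO(iris_df.to_parquet())

sdk.upload_data_store_object(
    filepath_or_buffer=file_buffer,
    object_path_in_datastore="get_started/dataset/iris.parquet",
)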

The filepath_or_buffer argument can be a string or a file-like object.

If it is a string, it is the path to the file to upload. If it is a file-like object, you pass an IO object, that is, data kept in memory rather than written to disk. Here we use a BytesIO object.
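To make the path-or-buffer distinction concrete, here is a minimal sketch that uses only the standard library. The helper `read_payload` is hypothetical and is not part of the SDK; it only mimics how a function can accept either a path string or an in-memory buffer.

```python
from io import BytesIO
from pathlib import Path
import tempfile


def read_payload(filepath_or_buffer):
    """Hypothetical helper (not an SDK function): return the bytes to send."""
    if isinstance(filepath_or_buffer, str):
        # A string is treated as a path to a file on disk.
        return Path(filepath_or_buffer).read_bytes()
    # Anything else is assumed to be a file-like object opened in binary mode.
    return filepath_or_buffer.read()


data = b"sepal_length,sepal_width\n5.1,3.5\n"

# Option 1: write the data to disk and pass the path.
with tempfile.TemporaryDirectory() as tmp_dir:
    file_path = str(Path(tmp_dir) / "sample.csv")
    Path(file_path).write_bytes(data)
    from_path = read_payload(file_path)

# Option 2: keep the data in memory and pass a buffer.
from_buffer = read_payload(BytesIO(data))

print(from_path == from_buffer)
```

A buffer created with `BytesIO(initial_bytes)` starts at position 0. If you write into an empty buffer instead, it is generally safer to call `seek(0)` before handing it to a reader.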

Source code for model training

We will use the following code, which trains a scikit-learn KNN classifier on the iris dataset from the Data Store and puts the trained model on the Data Store. Run this code in the terminal to get all the information into the Data Store.

import joblib
import numpy as np
import pandas as pd
from sklearn.neighbors import KNeighborsClassifier

from craft_ai_sdk import CraftAiSdk


def trainIris():
    sdk = CraftAiSdk()

    # Download the dataset from the Data Store to a local file.
    sdk.download_data_store_object(
        object_path_in_datastore="get_started/dataset/iris.parquet",
        filepath_or_buffer="iris.parquet",
    )
    dataset_df = pd.read_parquet("iris.parquet")

    # Separate the four features from the target column.
    X = dataset_df.loc[:, dataset_df.columns != "target"].values
    y = dataset_df.loc[:, "target"].values

    # Shuffle with a fixed seed, then keep 80% for training and 20% for validation.
    np.random.seed(0)
    indices = np.random.permutation(len(X))
    n_train_samples = int(0.8 * len(X))
    train_indices = indices[:n_train_samples]
    val_indices = indices[n_train_samples:]

    X_train = X[train_indices]
    y_train = y[train_indices]
    X_val = X[val_indices]
    y_val = y[val_indices]

    # Train the classifier and report its accuracy on the validation set.
    knn = KNeighborsClassifier()
    knn.fit(X_train, y_train)
    mean_accuracy = knn.score(X_val, y_val)
    print("Mean accuracy:", mean_accuracy)

    # Save the model locally, then upload it to the Data Store.
    joblib.dump(knn, "iris_knn_model.joblib")
    sdk.upload_data_store_object(
        "iris_knn_model.joblib", "get_started/models/iris_knn_model.joblib"
    )
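For readers who want to see what the split and the classifier do, the sketch below reproduces the same steps with the standard library only. It is an illustration, not the tutorial's code: it swaps NumPy and scikit-learn for plain Python, the toy data is made up, and the shuffle will not produce the same permutation as `np.random.permutation`. The choice of 5 neighbours with a Euclidean distance and a majority vote mirrors scikit-learn's default `KNeighborsClassifier` settings.

```python
import math
import random
from collections import Counter


def shuffled_split(n_samples, train_fraction=0.8, seed=0):
    """Shuffle the indices 0..n_samples-1 and cut them into train/validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = int(train_fraction * n_samples)
    return indices[:n_train], indices[n_train:]


def knn_predict(X_train, y_train, x, k=5):
    """Majority vote among the k training points closest to x (Euclidean)."""
    by_distance = sorted(range(len(X_train)), key=lambda i: math.dist(X_train[i], x))
    votes = Counter(y_train[i] for i in by_distance[:k])
    return votes.most_common(1)[0][0]


# Toy data (made up for illustration): two well-separated clusters.
X = [[0.1 * i, 0.0] for i in range(10)] + [[10.0 + 0.1 * i, 10.0] for i in range(10)]
y = [0] * 10 + [1] * 10

train_idx, val_idx = shuffled_split(len(X))
X_train = [X[i] for i in train_idx]
y_train = [y[i] for i in train_idx]

# Mean accuracy: share of validation samples whose predicted label is correct.
correct = sum(knn_predict(X_train, y_train, X[i]) == y[i] for i in val_idx)
mean_accuracy = correct / len(val_idx)
print("Mean accuracy:", mean_accuracy)
```

Fixing the seed makes the split reproducible from one run to the next, which is why the training function calls `np.random.seed(0)` before shuffling.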

Advanced pipeline configuration

To make sure that all the required libraries are installed with the correct versions in our pipeline, we are going to use a requirements.txt file that lists them.

We will then use the requirements_path parameter in the container_config to specify its path. In this tutorial, we choose to store it in the src folder for easier management.

craft_ai_sdk==xx.xx.xx
joblib==xx.xx.xx
numpy==xx.xx.xx
scikit_learn==xx.xx.xx
pandas==xx.xx.xx
pyarrow==xx.xx.xx

Tip

Example with up-to-date version numbers available here.

Tip

You can set the default path for this file in the Libraries & Packages section of your project settings using the web interface. All pipelines created in this project will then use this path by default.
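If these libraries are already installed in your local environment, one possible way to find the version numbers to pin is to read them with the standard library's `importlib.metadata`. This is only a sketch: the package names below are the hyphenated spellings assumed to match the list above (pip treats hyphens and underscores in package names as equivalent), and the versions installed locally are not necessarily the ones recommended for the platform.

```python
from importlib.metadata import PackageNotFoundError, version

# Package names assumed to correspond to the requirements listed above.
PACKAGES = ["craft-ai-sdk", "joblib", "numpy", "scikit-learn", "pandas", "pyarrow"]


def pinned_line(package):
    """Return 'name==version' for an installed package, or flag it as missing."""
    try:
        return f"{package}=={version(package)}"
    except PackageNotFoundError:
        return f"# {package} is not installed in this environment"


# Print one line per package, ready to be reviewed and copied into requirements.txt.
for name in PACKAGES:
    print(pinned_line(name))
```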

It can also be useful to describe each pipeline precisely when you create it, so that its purpose is easy to understand later. To do so, fill in the description parameter when creating the pipeline.

To create this new pipeline, run this code in the terminal:

sdk.create_pipeline(
    pipeline_name="part-2-iristrain",
    function_path="src/part-2-iris-train.py",
    function_name="trainIris",
    description="This function creates a classifier model for iris",
    container_config={
        "requirements_path": "requirements.txt",  # Put the path to requirements.txt here
        "local_folder": ".../get_started",  # Enter the path to your local folder here
    },
)
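Before calling create_pipeline, it can help to check locally that the files and the function it refers to actually exist. The helper below is hypothetical and not part of the SDK; it also assumes that function_path and requirements_path are interpreted relative to local_folder, which you should confirm against the SDK documentation.

```python
import ast
import tempfile
from pathlib import Path


def check_pipeline_sources(local_folder, function_path, function_name, requirements_path):
    """Hypothetical pre-flight check; returns a list of problems (empty if none)."""
    root = Path(local_folder)
    problems = []
    source_file = root / function_path
    if not source_file.is_file():
        problems.append(f"missing source file: {function_path}")
    else:
        # Parse the file without executing it and collect top-level function names.
        tree = ast.parse(source_file.read_text())
        top_level = {node.name for node in tree.body if isinstance(node, ast.FunctionDef)}
        if function_name not in top_level:
            problems.append(f"function {function_name!r} not found in {function_path}")
    if not (root / requirements_path).is_file():
        problems.append(f"missing requirements file: {requirements_path}")
    return problems


# Demonstration on a throwaway folder that mimics the tutorial layout,
# deliberately created without a requirements.txt file.
with tempfile.TemporaryDirectory() as folder:
    (Path(folder) / "src").mkdir()
    (Path(folder) / "src" / "part-2-iris-train.py").write_text("def trainIris():\n    pass\n")
    problems = check_pipeline_sources(
        folder, "src/part-2-iris-train.py", "trainIris", "requirements.txt"
    )

print(problems)
```

An empty list means the three arguments point at things that exist; in the demonstration the list contains a single entry because the requirements file was left out on purpose.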

To go further with pipeline creation

If you want to create a pipeline based on the code of a Git repository, you can check this page.

Success

🎉 You can now execute a more realistic Machine Learning pipeline.

Now that our pipelines can run more complex code and we know how to configure their execution context, we would like to pass them inputs to vary the result, and to retrieve that result easily. For this, we can use the input/output feature offered by the platform.

Next step: Part 3: Execute with input and output